In this blog i will explore some of waiting strategy for inter thread communication.

BlockingQueue is integral part of many concurrency framework including ExecutorService of Java.

Some of concrete implementation of BlockingQueue are ArrayBlockingQueue , LinkedBlockingQueue.

These queues are extensively used for inter thread communication, one of the complex aspect of blocking queue is Waiting Strategy.

Some of Waiting Strategy

- Sleep Based - This is most naive way, sometime it can be best fit

- Spin Based - This is best when you know cost of context switch is more than spin, good fit if you have have free core available.

- Hybrid ( Spin & Sleep both) - Hope to get best of both world!

- Lock based - Classic wait/notify type, JDK 5 has simpler API to achieve this using Condition. Locks & Condition has has hidden all the complexity required for inter thread communication. It is used in ArrayBlockingQueue, LinkedBlockingQueue and many more place in JDK

ArrayBlockingQueue Vs LinkedBlockingQueue

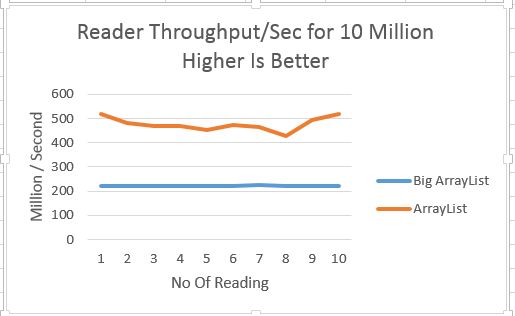

Both type of queue is using lock based waiting strategy but ArrayBlockingQueue is must faster as compared LinkedBlockingQueue because it is using Array as the underlying data structure.

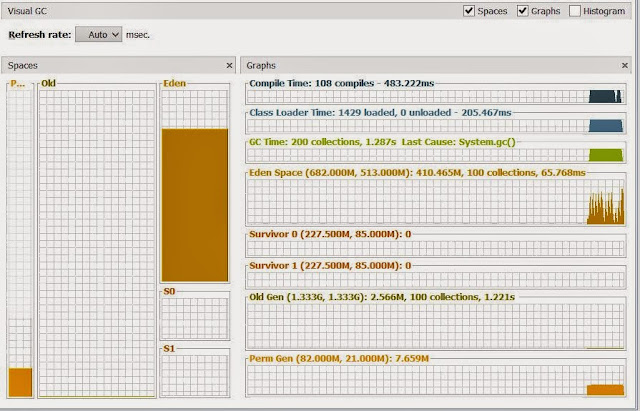

- It does all the memory allocation up front, so little less work for GC.

- Linear memory access as compared to LinkedList. I shared my finding with cache friendly linked list in linked-list-revisited-for-in-memory blog

Throughput of Queues

Lets look at Throughput numbers for some queues. In this test i add 50 Million message to queue and 1 Producer & 1 Consumer is used.

I am using 3 types of queue in this test

- LinkedBlockingQueue

- ArrayBlockingQueue

- ConcurrentLinkedQueue - This is Lock Free queue , it is interesting to see number of this queue,since this is not a blocking queue, so i will be using Spin based waiting strategy on consumer side

Another reason to include CLQ is later i will be using different waiting strategy with this queue to make it blocking on consumer side.

Y Axis - Throughput in 100K/Second

X- Axis - Type of Queue

Numbers looks as expected, concurrent linked queue is best

Now i will add some proper wait strategy to ConcurrentLinkedQueue and measure performance

- Lock Based - This one is similar to what ArrayBlockingQueue is using

- Park Based - This is custom wait strategy inspired from Conditions of Lock, it is bit different than Lock

Throughput - Comparison

Y Axis - Throughput in 100K/Second

X- Axis - Type of Queue

Some description about labels

Con Spin - ConurrentLinkedQueue using Spin

Con Lock- ConurrentLinkedQueue using Lock (i.e using Condition)

Con Park- ConurrentLinkedQueue using explicit thread parking

ConcurrentLinkedQueue using Lock based strategy suffers , performance of the queue is similar to ArrayBlockingQueue, so definitely not is good idea to to use Locks because all the benifit of LockFree queue is gone as Locks are introduced.

The one using custom Park that i described above out performs, it is around 150% faster than ArrayBlocking & around 13% faster than Spin based concurrent queue.

Executors Using ConcurrentLinkedQueue

Park based strategy which is like Condtions in JDK but without overhead of Lock seems better choice, but before we conclude anything , lets look at another test result.

I performed test on Executors by using different types of queue(ArrayBlockingQueue,ConcurrentBlocking With Park)

I also included WorkStealingPool to test which is included in JDK 8.

In this test 1 Million message is added by each producer to ExecutorService and slowly number of producer & threads in executor is increased.

Executor using ConcurrentLinkedQueue with Park Wait strategy seems best and in some case it is better than ForkJoinPool especially when number of Producer are increased.

Conclusion

Waiting strategy is complex, different use case might need different strategy to get best performance. This blog shows one of strategy which seems better than Lock based strategy that we have in JDK.

Code is available @ github

BlockingQueue is integral part of many concurrency framework including ExecutorService of Java.

Some of concrete implementation of BlockingQueue are ArrayBlockingQueue , LinkedBlockingQueue.

These queues are extensively used for inter thread communication, one of the complex aspect of blocking queue is Waiting Strategy.

Some of Waiting Strategy

- Sleep Based - This is most naive way, sometime it can be best fit

- Spin Based - This is best when you know cost of context switch is more than spin, good fit if you have have free core available.

- Hybrid ( Spin & Sleep both) - Hope to get best of both world!

- Lock based - Classic wait/notify type, JDK 5 has simpler API to achieve this using Condition. Locks & Condition has has hidden all the complexity required for inter thread communication. It is used in ArrayBlockingQueue, LinkedBlockingQueue and many more place in JDK

ArrayBlockingQueue Vs LinkedBlockingQueue

Both type of queue is using lock based waiting strategy but ArrayBlockingQueue is must faster as compared LinkedBlockingQueue because it is using Array as the underlying data structure.

- It does all the memory allocation up front, so little less work for GC.

- Linear memory access as compared to LinkedList. I shared my finding with cache friendly linked list in linked-list-revisited-for-in-memory blog

Throughput of Queues

Lets look at Throughput numbers for some queues. In this test i add 50 Million message to queue and 1 Producer & 1 Consumer is used.

I am using 3 types of queue in this test

- LinkedBlockingQueue

- ArrayBlockingQueue

- ConcurrentLinkedQueue - This is Lock Free queue , it is interesting to see number of this queue,since this is not a blocking queue, so i will be using Spin based waiting strategy on consumer side

Another reason to include CLQ is later i will be using different waiting strategy with this queue to make it blocking on consumer side.

Y Axis - Throughput in 100K/Second

X- Axis - Type of Queue

Numbers looks as expected, concurrent linked queue is best

Now i will add some proper wait strategy to ConcurrentLinkedQueue and measure performance

- Lock Based - This one is similar to what ArrayBlockingQueue is using

- Park Based - This is custom wait strategy inspired from Conditions of Lock, it is bit different than Lock

- Don't have to acquire lock to use it, so that overhead is removed

- Have to specify number of waiters, it is similar to Concurreny Level of Concurrent Hashmap, it will create slot for each waiter and that is used when thread is parked. In case if no slots is available then thread is parked for some time.

Throughput - Comparison

Y Axis - Throughput in 100K/Second

X- Axis - Type of Queue

Some description about labels

Con Spin - ConurrentLinkedQueue using Spin

Con Lock- ConurrentLinkedQueue using Lock (i.e using Condition)

Con Park- ConurrentLinkedQueue using explicit thread parking

ConcurrentLinkedQueue using Lock based strategy suffers , performance of the queue is similar to ArrayBlockingQueue, so definitely not is good idea to to use Locks because all the benifit of LockFree queue is gone as Locks are introduced.

The one using custom Park that i described above out performs, it is around 150% faster than ArrayBlocking & around 13% faster than Spin based concurrent queue.

Executors Using ConcurrentLinkedQueue

Park based strategy which is like Condtions in JDK but without overhead of Lock seems better choice, but before we conclude anything , lets look at another test result.

I performed test on Executors by using different types of queue(ArrayBlockingQueue,ConcurrentBlocking With Park)

I also included WorkStealingPool to test which is included in JDK 8.

In this test 1 Million message is added by each producer to ExecutorService and slowly number of producer & threads in executor is increased.

Executor using ConcurrentLinkedQueue with Park Wait strategy seems best and in some case it is better than ForkJoinPool especially when number of Producer are increased.

Conclusion

Waiting strategy is complex, different use case might need different strategy to get best performance. This blog shows one of strategy which seems better than Lock based strategy that we have in JDK.

Code is available @ github